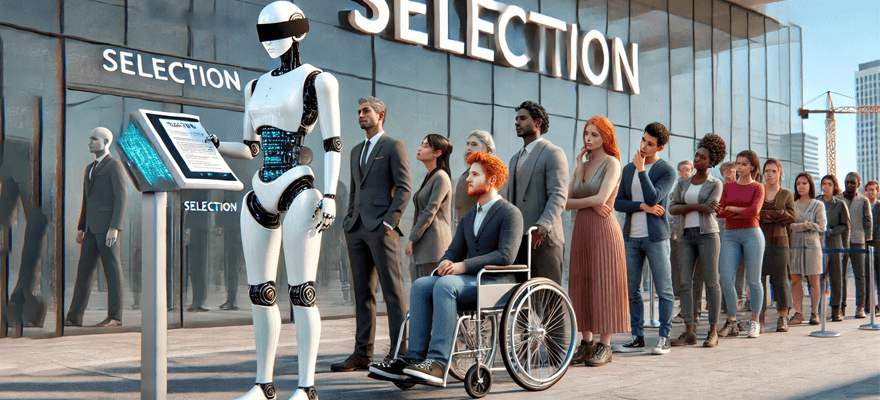

How AI can help HR combat bias and discrimination in the hiring process

As workplaces strive for greater diversity, can we turn to AI to help us remove bias and discrimination? Or does it make the problem worse?

Despite efforts to build more diverse and inclusive workplaces, bias and discrimination in hiring remain significant challenges. Two large field studies, one by the UK Department for Work and Pensions (2008 and 2009) and one by a European research project (2019), show that ethnic discrimination in hiring persists. Together, these studies span the UK, the US, Germany, the Netherlands, Norway, and Spain, highlighting the systemic nature of the issue.

Let’s start with screening

Racial bias in the screening process is often at the root of this discrimination, acting as a critical barrier to entry into organisations. In response, employers such as Starbucks, Google, and the UK Civil Service have introduced unconscious bias and diversity training programmes. While research shows that diversity training can be effective when properly applied, it has limitations, and its impact can fade over time.

While diversity training is crucial, AI offers HR departments an additional tool to combat bias and discrimination at the screening stage. The hope is that, by using AI and algorithms, human unconscious bias can be minimised or even removed from the hiring process. However, AI is not a flawless solution: many algorithms currently used in applicant tracking systems have been shown to replicate, and sometimes even amplify, the very biases they were meant to eliminate.

Even general-purpose AI tools like ChatGPT, which openly acknowledge their own biases, can unintentionally reflect racial or gender bias because they are trained on data from an unequal world. Developers are aware of this and are working to train AI systems on more representative datasets, but this is still a work in progress. Given these limitations, human oversight remains necessary. With that oversight in place, AI can still play a powerful role in helping HR reduce bias in hiring.

Reducing bias in job descriptions

Research shows that job descriptions often contain bias, which can deter or even exclude qualified candidates from diverse backgrounds. Tools like ChatGPT can review job descriptions and flag potential bias much faster than a manual review. Common areas where bias can occur include:

- Gendered language

- Exclusionary terms

- Excessive educational or experience requirements

- Inflexible working hours or location preferences

- “Cultural fit” criteria that may unintentionally exclude diverse candidates

Once bias is detected, AI tools can suggest more inclusive alternatives, helping job descriptions appeal to a wider pool of applicants. ChatGPT can also explain the reasoning behind its suggestions. When used effectively, these tools can increase response rates to job ads and reduce the cost per applicant. However, while AI provides valuable insights, HR professionals retain final control over the wording of the job description.

AI-powered blind hiring

Blind hiring, the practice of removing information such as age, race, gender, and educational background from applications, is intended to ensure that hiring managers focus purely on a candidate’s job-specific qualifications. Blind hiring was once considered too labour-intensive, but AI has made it more feasible by automating much of the process.

However, deciding whether to implement AI-powered blind hiring requires careful consideration. For example, if your data shows that your organisation already attracts a diverse pool of candidates but struggles to convert them into diverse hires, the bias is likely entering later in the process, so blind hiring at the screening stage may not improve diversity. Instead, reducing bias in interviews or final selection decisions might be more effective.

AI can help produce less biased structured interview questions

AI-powered tools can also help HR develop structured interview questions designed to minimise bias, so that all candidates are evaluated on their skills rather than on subjective criteria. AI-driven interview tools, designed to reduce bias in the interview itself, are also emerging. Although these tools show promise, studies, including one reported by MIT Technology Review, suggest that AI is not yet reliable enough to make hiring decisions on its own. Instead, AI should be used alongside human interviewers to help identify and address bias.

While AI is not a perfect solution, it offers HR departments a valuable tool to combat bias and discrimination in hiring. From reducing bias in job descriptions to facilitating blind hiring and developing structured, less biased interview questions, AI has the potential to create a more equitable hiring process. However, it is essential that organisations maintain human oversight and that product developers continue to improve their algorithms to ensure fairness and inclusivity. When used thoughtfully, AI can complement existing diversity efforts and help create more inclusive workplaces.